DoReFa-Net: Training Low Bitwidth Convolutional Neural Networks with Low Bitwidth Gradients

2024-01-12

Keywords: #Quantization

0. Abstract

- Proposal: A method to train NNs that have low bitwidth weights/ activations using low bitwidth parameter gradients.

1. Introduction

- BNN, XNOR-Net: Both weights and activations of conv layers are binarized. → Computationally expensive convolutions can be done by bitwise operation kernels during forward pass.

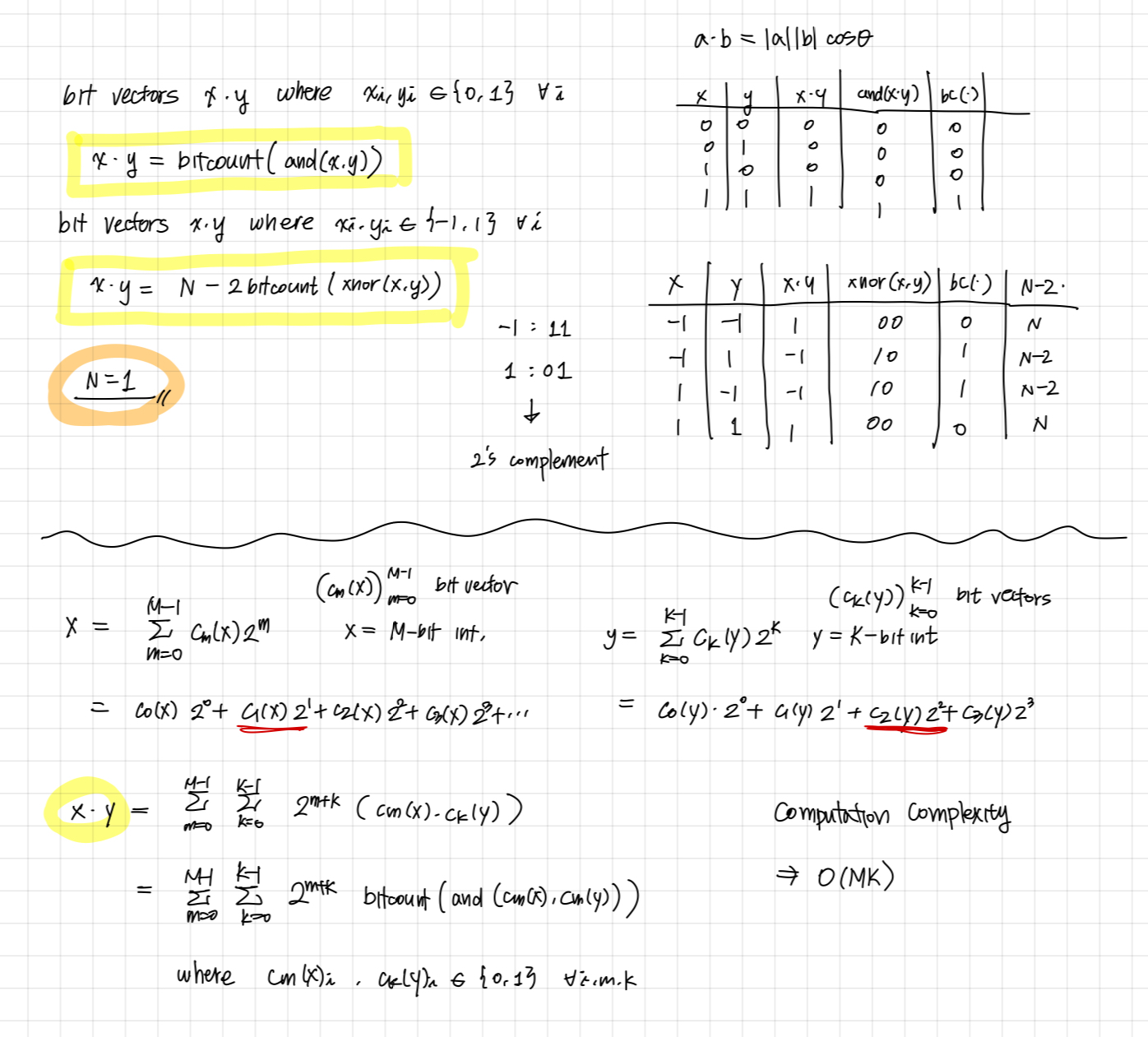

- Dot product of bit vectors $x$ and $y$ using bitwise op.

- When $x$ and $y$ are vectors of ${-1, 1}$,

- No previous work has succeeded in quantizing gradients to numbers with bitwidth less than 8 during backward pass.

- Contributions

- DoReFa-Net: Generalize the method of binarized NN, bit convolution kernels to accelerate both forward and backward pass of the training process.

- Bit convolution can be implemented on CPU, FPGA, ASIC and GPU. Considerably reduces energy consumption of low bitwidth NN training

- Quantization sensitivity: gradients > activations > weights

2. DoReFa-Net

- A method to train NN that has low bw(bitwidth) weights/activation with low bw parameter gradients.

- While weights/activations can be deterministically quantized, gradients need to be stochastically quantized.

2.1 Using Bit Convolution Kernels in Low Bitwidth NN

- Computation complexity: $O(MK)$

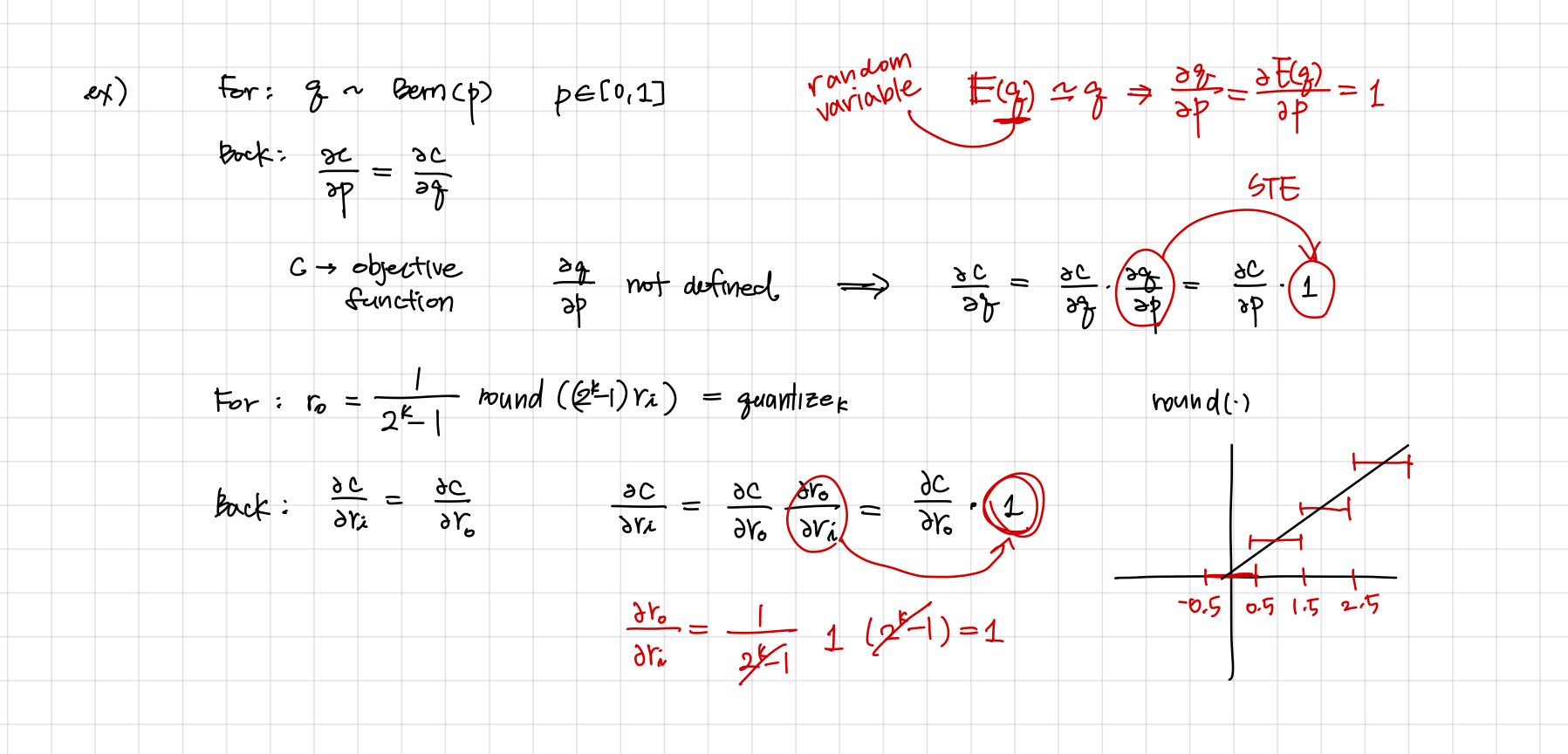

2.2 Straight-Through Estimator